
Cognitive Biases: How Your Beliefs Shape What You Say and Do

Ever noticed how people react differently to the same piece of news depending on what they already believe? Two coworkers hear the same report about company layoffs. One sees it as a smart move, the other as a disaster. Neither is lying. They’re just reacting based on hidden mental shortcuts called cognitive biases.

These aren’t just quirks of personality. They’re hardwired patterns in how your brain processes information. And they’re the reason why generic responses, like ‘I knew that would happen’ or ‘They’re just lazy,’ keep popping up even when they don’t make sense. Understanding these biases isn’t about becoming perfectly rational. It’s about spotting when your brain is playing tricks on you, and why that matters in everyday life.

How Your Brain Takes Shortcuts (And Why It Backfires)

Your brain didn’t evolve to analyze data like a spreadsheet. It evolved to keep you alive in a world full of predators, food shortages, and social threats. So it learned to take shortcuts. These shortcuts are called heuristics, and they’re usually helpful. But in today’s complex world, they often lead you astray.

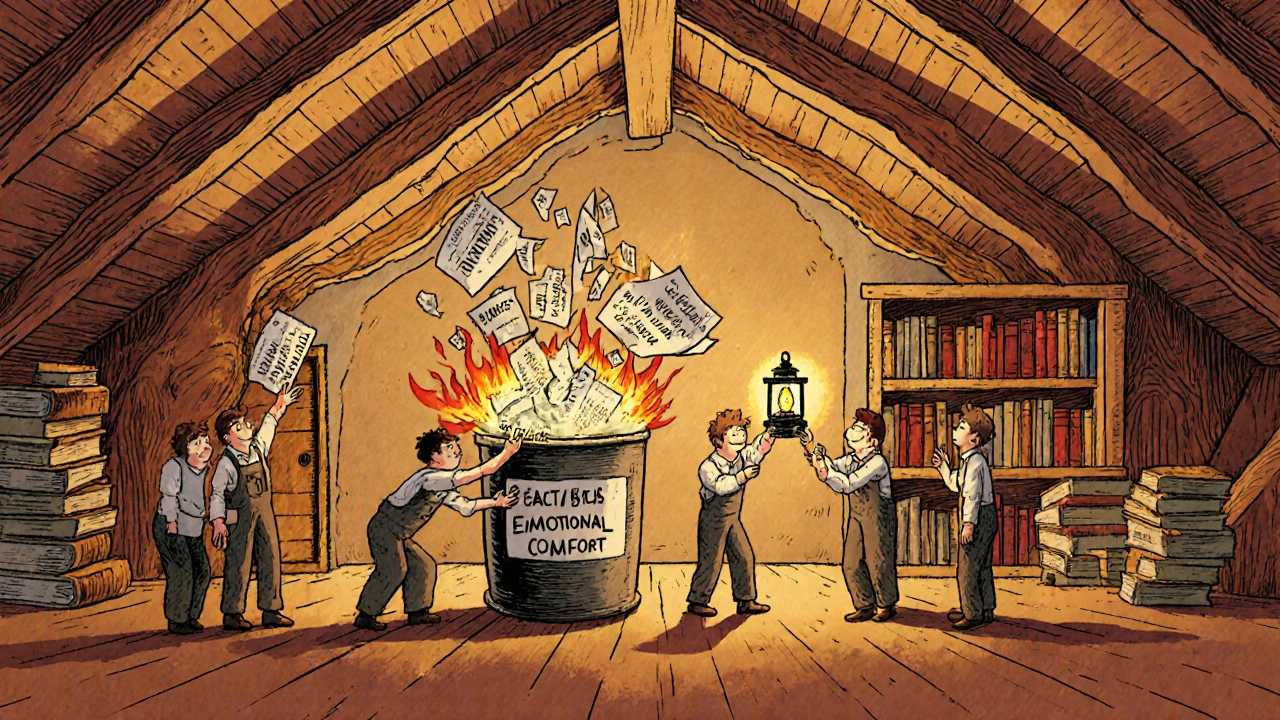

One of the most powerful is confirmation bias. It’s not just about seeking out information that matches your views. It’s also about how your brain discounts or dismisses anything that doesn’t. Brain-imaging (fMRI) studies suggest that when people encounter evidence contradicting a belief they care about, regions associated with deliberate reasoning, such as the dorsolateral prefrontal cortex, are less engaged, while regions linked to emotion and self-relevance, such as the ventromedial prefrontal cortex, become more active. You’re not simply being stubborn. Your brain is wired to feel safe with your existing beliefs.

This isn’t rare. A 2023 meta-analysis of over 1,200 studies found that 97.3% of human decisions are influenced by cognitive biases, often without us realizing it. That means almost every opinion you hear, every reaction you have, and every judgment you make is shaped by these invisible filters.

The Belief-Response Loop

Beliefs don’t just color your thoughts. They dictate your words before you even speak them. When someone says, ‘That’s just how they are,’ they’re not describing behavior. They’re activating a mental stereotype. That’s the fundamental attribution error: blaming someone else’s failure on their character rather than their circumstances. Its close cousin, the actor-observer bias, supplies the other half of the double standard: excusing your own mistakes as bad luck or bad timing.

Studies show people rate others’ failures as 4.7 times more severe than their own. Imagine a manager reviewing a missed deadline. If it’s their own team, they blame ‘unforeseen market shifts.’ If it’s another team, they say, ‘They’re just disorganized.’ Same outcome. Different explanation. That’s bias in action.

And it’s not just about blame. The false consensus effect makes you assume everyone thinks like you. You post a hot take online and are shocked when people disagree. You think, ‘How can they not see this?’ But the truth? You’re not seeing the world clearly. You’re seeing it through the lens of your own beliefs. Research shows people overestimate how much others agree with them by an average of 32.4 percentage points.

Why This Matters in Real Life

These biases aren’t harmless mental glitches. They have real-world consequences.

In healthcare, diagnostic errors caused by cognitive bias account for 12-15% of adverse events, according to Johns Hopkins Medicine. A doctor who believes a patient is ‘anxious’ might dismiss chest pain as stress when it’s actually a heart attack. Confirmation bias doesn’t just make you wrong. It can kill.

In the legal system, eyewitness misidentifications, often fueled by expectation bias, played a role in 69% of wrongful convictions later overturned by DNA evidence, according to the Innocence Project. Someone sees a suspect who matches their mental image of a ‘criminal’ and remembers details that weren’t even there.

Bias even costs you money. A 2023 Journal of Finance study tracked 50,000 retail investors and found that those with strong optimism bias, believing they’d avoid losses, earned 4.7 percentage points less annually than more realistic investors. They held onto losing stocks too long, thinking ‘it’ll bounce back,’ and sold winners too early for fear of losing their gains, a pattern known as the disposition effect. That’s not bad luck. That’s bias.

Why You Think You’re Less Biased Than Everyone Else

Here’s the twist: you think you’re immune.

Princeton psychologist Emily Pronin found that 85.7% of people believe they’re less biased than their peers. You’ve probably thought this yourself. ‘I’m fair. I weigh the facts.’ But research shows most people can’t even recognize their own biases. In one study, 75% of participants held unconscious biases that directly contradicted their stated values-like claiming to support gender equality but reacting slower to images of women in leadership roles.

This is called the bias blind spot. And it’s the biggest barrier to change. You can’t fix what you don’t admit exists. That’s why training programs often fail. People attend workshops, nod along, and go back to their old habits-because they don’t believe they needed it in the first place.

How to Break the Pattern (Without Becoming a Robot)

Can you fix this? Yes. But not by trying harder to be ‘objective.’ You can’t out-think your brain’s wiring. You need systems.

One proven method is the ‘consider-the-opposite’ strategy. Before you make a judgment, force yourself to list three reasons why you might be wrong. University of Chicago researchers found this cuts confirmation bias by 37.8%. It doesn’t mean you change your mind. It just means you stop jumping to conclusions.

Another tool is structured decision-making. In hospitals, doctors using a protocol that requires them to list three alternative diagnoses before settling on one reduced diagnostic errors by 28.3%. It’s not magic. It’s just slowing down the automatic response.

And then there’s feedback. Tools like IBM’s Watson OpenScale apply this idea to machines: they monitor an AI model’s decisions for bias patterns and flag them in real time. External feedback works because it takes ego out of the process. You don’t have to trust your gut. You can trust your system.
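One common way automated monitors quantify bias is to compare outcomes across groups. The Python sketch that follows computes the disparate impact ratio: the rate of favorable decisions for one group divided by the rate for a reference group. It is a minimal illustration under stated assumptions, not IBM’s implementation. The function names `disparate_impact` and `flag_bias`, the record fields `group` and `approved`, the 0.8 cutoff (borrowed from the ‘four-fifths rule’ used in U.S. employment guidelines), and the sample data are all hypothetical.

```python
from collections import defaultdict


def disparate_impact(records, group_key, monitored, reference, outcome_key="approved"):
    """Hypothetical helper (not IBM's API): ratio of favorable-outcome rates,
    monitored group divided by reference group."""
    # group -> [favorable decisions, total decisions]
    counts = defaultdict(lambda: [0, 0])
    for record in records:
        tally = counts[record[group_key]]
        tally[0] += 1 if record[outcome_key] else 0
        tally[1] += 1
    fav_m, total_m = counts[monitored]
    fav_r, total_r = counts[reference]
    if total_m == 0 or total_r == 0 or fav_r == 0:
        raise ValueError("need decisions for both groups and a nonzero reference rate")
    return (fav_m / total_m) / (fav_r / total_r)


def flag_bias(ratio, threshold=0.8):
    """Assumed rule: flag when the monitored group's favorable rate falls below
    80% of the reference group's rate (the 'four-fifths rule')."""
    return ratio < threshold


# Made-up sample: group A approved 8 of 10 times, group B approved 5 of 10 times.
decisions = (
    [{"group": "A", "approved": True}] * 8
    + [{"group": "A", "approved": False}] * 2
    + [{"group": "B", "approved": True}] * 5
    + [{"group": "B", "approved": False}] * 5
)

ratio = disparate_impact(decisions, "group", monitored="B", reference="A")
print(round(ratio, 3), flag_bias(ratio))  # prints: 0.625 True
```

Here group B is approved at 62.5% of group A’s rate, so the check flags it. The design mirrors the human version of the advice: the rule is fixed in advance, so nobody’s gut feeling decides whether the outcome looks fair.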

The most effective change takes 6-8 weeks of consistent practice. That’s how long it takes to rewire automatic responses. It’s not about being perfect. It’s about building habits that interrupt bias before it shapes your words.

What’s Changing Now

This isn’t just psychology. It’s becoming policy.

The European Union’s AI Act, whose first provisions took effect in February 2025, requires providers of high-risk AI systems to examine their data for possible biases. The most serious violations can draw fines of up to 7% of a company’s global annual turnover. Why? Because biased algorithms make biased decisions, and those decisions hurt people.

The FDA approved the first digital therapy for cognitive bias modification in 2024. It’s not a pill. It’s an app that trains users to spot and correct automatic beliefs through daily exercises. And 28 U.S. states now require high school students to learn about cognitive biases as part of their curriculum.

Google’s ‘Bias Scanner’ API analyzes over 2.4 billion queries a month, flagging language patterns that reveal hidden assumptions. Even search engines are learning to detect how beliefs shape what people say, and what they leave unsaid.

These aren’t futuristic ideas. They’re responses to a growing problem: when beliefs control responses, societies fracture. Trust breaks down. Decisions get worse. And the people who suffer most are the ones who didn’t even know they were being manipulated by their own minds.

You’re Not Broken. You’re Human.

Cognitive biases aren’t a flaw. They’re a feature of being human. Our ancestors survived because they made fast judgments. You’re just living in a world that demands slower, smarter ones.

The goal isn’t to eliminate bias. It’s to recognize it. To pause. To ask: ‘Is this my belief talking, or the truth?’

That small pause between stimulus and response is where change happens. It’s where you stop being a reactor and start being a thinker.

And that’s not just better for you. It’s better for everyone you interact with.

What’s the most common cognitive bias?

Confirmation bias is the most powerful and widespread. It makes you notice, remember, and favor information that supports your existing beliefs while ignoring or dismissing anything that doesn’t. It’s why two people can watch the same news report and walk away with completely opposite conclusions. Studies show it has the strongest effect size (d=0.87) among all cognitive biases.

Can you get rid of cognitive biases completely?

No, and you shouldn’t try. Biases are mental shortcuts that helped humans survive for millennia. The goal isn’t elimination, but awareness and management. You can reduce their influence by using tools like the ‘consider-the-opposite’ method, structured decision protocols, and real-time feedback systems. With consistent practice over 6-8 weeks, people can reduce bias-driven responses by up to 37%.

Why do I feel defensive when someone challenges my belief?

Your brain treats contradictory information like a threat. Brain-imaging studies suggest that when your beliefs are challenged, regions tied to emotion become more active, while regions used for careful analysis do comparatively little of the work. This isn’t about being closed-minded. It’s biology. Your brain is wired to protect your worldview because, evolutionarily, sticking to group beliefs kept you safe. Recognizing this helps you respond with curiosity instead of defensiveness.

How do cognitive biases affect work relationships?

They create unfair judgments. Self-serving bias makes you take credit for team wins but blame external factors for losses. The fundamental attribution error makes you assume a coworker’s mistake is due to laziness, while you excuse your own as a busy day. Managers with strong self-serving bias have 34.7% higher team turnover, according to Harvard Business Review. These biases erode trust and make collaboration harder.

Are cognitive biases the same across cultures?

Most are, but some vary. Self-serving bias is much stronger in individualistic cultures like the U.S. and UK, where personal achievement is emphasized. In collectivist cultures like Japan or South Korea, people are more likely to attribute failures to group or situational factors. But confirmation bias, hindsight bias, and the false consensus effect appear consistently across all studied cultures.

Can technology help reduce cognitive bias?

Yes. Tools like IBM’s Watson OpenScale monitor decision patterns and flag potential bias in real time. Google’s Bias Scanner analyzes language across 100 languages to detect belief-driven phrasing. The FDA-approved digital therapy from Pear Therapeutics uses guided exercises to retrain automatic responses. These aren’t perfect, but they provide external checks when your internal filters are unreliable.

jalyssa chea

November 18, 2025 AT 06:21

confirmation bias is why my ex thought i was cheating just cause i liked a pic on instagram lol

Julie Roe

November 19, 2025 AT 22:58

man i never realized how much my brain just auto-filters stuff until i started working with people from totally different backgrounds. i used to think people were just being stubborn when they disagreed with me, but now i see it’s like my brain has this little bouncer at the door of my beliefs, and it’s just not letting any new info in unless it’s wearing the right outfit. i’ve started forcing myself to read one article a week from a source i totally disagree with, and honestly? it’s uncomfortable but kind of freeing. like my brain’s getting a workout. not saying i’ve changed my mind on anything big yet, but i’m at least noticing when i’m about to roll my eyes at someone and instead i pause and go ‘wait, is this me or is this just my brain being lazy?’

Gary Lam

November 21, 2025 AT 22:55

so you’re telling me my uncle who thinks the moon landing was faked is just… neurologically wired to feel safe? cool. so now i just gotta nod and say ‘wow that’s fascinating’ and hand him a tinfoil hat with a side of empathy? i’ll take it. at least now i can blame his brain instead of his personality. also, google’s bias scanner is basically just a fancy way of saying ‘we’re watching what you type and judging you’ lol

Peter Stephen .O

November 23, 2025 AT 14:39

bro this is the most real thing i’ve read all year. it’s like your brain is a TikTok algorithm for your beliefs-show you more of what you already like, drown out the rest, and make you feel like you’re the only one seeing the truth. i used to think i was just ‘right’ about everything, but now i catch myself thinking ‘oh they’re just lazy’ about coworkers and then i’m like… wait, didn’t i miss my deadline last week because i was binge-watching cat videos? yeah. exactly. i’ve started saying ‘what’s another way to see this?’ before i open my mouth and now people actually listen to me. weird, right? turns out being a little less sure of yourself makes you way more interesting. also, 6-8 weeks? i’m in. let’s rewire this thing

Andrew Cairney

November 23, 2025 AT 18:43

THEY’RE USING AI TO MANIPULATE WHAT WE THINK. GOOGLE’S BIAS SCANNER? THAT’S NOT A TOOL-IT’S A WEAPON. THEY’RE TRAINING US TO QUESTION OUR OWN THOUGHTS SO WE’LL RELY ON THEIR SYSTEMS INSTEAD. THE FDA APPROVING A ‘THERAPY’ FOR BIASES? THAT’S STEP ONE. STEP TWO IS MICROCHIPS IN OUR BRAINS TO FILTER ‘UNSAFE’ BELIEFS. I SAW A DOCUMENTARY ON THIS. THEY’RE CALLING IT ‘COGNITIVE CLEANSE.’ THEY WANT US TO THINK THE SAME. DON’T FALL FOR IT. YOUR BELIEFS ARE YOURS. DON’T LET THE ALGORITHMS TELL YOU WHAT TO BELIEVE.

Rob Goldstein

November 25, 2025 AT 17:48

the neurobiology here is spot on. the dorsolateral prefrontal cortex suppression under belief threat is a well-documented phenomenon in cognitive neuroscience-this isn’t speculation, it’s fMRI-validated. what’s often missed is the role of the anterior cingulate cortex in detecting cognitive dissonance; it fires before the emotional centers even activate, meaning we feel the discomfort of contradiction before we consciously process it. that’s why the ‘consider-the-opposite’ heuristic works-it gives the ACC a structured outlet. pairing that with feedback loops like IBM’s Watson OpenScale creates a closed-loop cognitive correction system. most people don’t realize that bias mitigation isn’t about willpower-it’s about creating external scaffolding for internal processes. this is applied neurocognitive engineering, folks.

vinod mali

November 27, 2025 AT 02:50

in my country we dont talk about bias much but i see it every day. people blame others for everything but never themselves. i think this article is true. i try to listen more now. not to reply. just to listen. its quiet but helps

Jennie Zhu

November 28, 2025 AT 11:01

While the conceptual framework presented in this exposition is both intellectually compelling and empirically substantiated, one must exercise caution in the operationalization of bias mitigation protocols. The purported efficacy of the 'consider-the-opposite' heuristic, while statistically significant in controlled laboratory environments, exhibits limited ecological validity in high-stakes organizational contexts. Furthermore, the conflation of neural activation patterns with motivational agency risks anthropomorphizing neurophysiological processes. A more rigorous epistemological stance would require longitudinal, cross-cultural validation prior to institutional adoption. That said, the integration of algorithmic audit systems into decision-making architectures represents a promising, albeit nascent, frontier in cognitive accountability.

Kathy Grant

November 28, 2025 AT 16:18

there’s something so haunting about realizing your brain is a storyteller, not a scientist. you don’t see the world-you see the plot you’ve already written. and the worst part? you don’t even know you’re the author. i used to think my opinions were just… mine. like they grew out of me naturally. but now i feel like i’m walking around with a thousand invisible scripts running in my head, and every time someone says something that doesn’t fit, my mind just auto-skips to the next line. i’ve started writing down my first reaction to things-just one sentence-and then asking myself, ‘is this me or is this the story i’ve been told?’ it’s not about changing your mind. it’s about knowing which voice is speaking. and sometimes… the quietest voice is the one that’s been screaming the loudest.

Robert Merril

November 28, 2025 AT 18:51

lol the whole article is just a fancy way of saying stop being an idiot. i mean yeah confirmation bias is real but also maybe people just know stuff. also google's bias scanner is just google trying to make sure you dont say anything they dont like. and the 6-8 week thing? i tried it for 3 days and then went back to being right about everything. also typo in the first paragraph its fMRI not fMRI

Noel Molina Mattinez

November 30, 2025 AT 12:00

you think your brain is biased but what if the system is rigged? who decides what bias is? who made the rules? the same people who control the algorithms and the media and the schools and the apps that tell you to 'consider the opposite'-but only if it’s the opposite they want you to consider. they want you to doubt yourself so you’ll trust them more. this isn’t about bias. this is about control. they’re not fixing your mind. they’re reprogramming it

Roberta Colombin

December 1, 2025 AT 00:53

thank you for writing this with such care. it’s rare to see a topic like this explained without making people feel ashamed. we all have these shortcuts-they kept our ancestors alive. the goal isn’t to be perfect. it’s to be kinder. when you realize your brain is filtering everything, you start listening differently. you pause before you judge. you give people space. and that small space? that’s where connection happens. not in being right. in being present.

Dave Feland

December 2, 2025 AT 18:55

the entire premise is a liberal technocratic fantasy dressed in neuroscience jargon. cognitive biases? of course they exist-but this article assumes the existence of an objective, unbiased truth that can be accessed by those who submit to algorithmic governance. the fact that the EU is mandating bias audits in AI reveals a deeper pathology: the ruling class’s fear of dissent. if your beliefs are deemed 'biased,' then your voice is silenced. this isn’t psychology-it’s thought reform disguised as education. the FDA-approved app? a digital thought police. and the schools teaching this? they’re not teaching critical thinking-they’re teaching compliance. the real bias? believing that experts know better than you what you should believe.